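- Embedding generation, which the Flask server performed with rinna/japanese-cloob-vit-b-16, moves into the database: we export openai/clip-vit-large-patch14 as an ONNX model, load it into the database, and generate the embeddings there.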

- Storing and searching embeddings, which Pinecone handled, moves to Oracle Database 23ai: the embeddings are stored in a vector-type column and searched there.
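To make the second change concrete, here is a minimal pure-Python sketch of what a similarity search over stored embeddings computes. It is only an illustration: in the setup described here the database does this work on the vector column, and the cosine metric, the function names, and the toy three-dimensional vectors are all assumptions made for the example.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for a zero vector")
    return 1.0 - dot / (norm_a * norm_b)


def top_k(query, stored, k=3):
    """Return the keys of the k stored vectors closest to the query."""
    ranked = sorted(stored.items(), key=lambda kv: cosine_distance(query, kv[1]))
    return [name for name, _ in ranked[:k]]


# Toy vectors standing in for real embeddings (hypothetical data).
stored = {
    "cat.jpg": [0.9, 0.1, 0.0],
    "dog.jpg": [0.7, 0.3, 0.1],
    "car.jpg": [0.0, 0.2, 0.9],
}
query = [1.0, 0.0, 0.0]
nearest = top_k(query, stored, k=2)
```

The same ranking idea applies whether the query embedding comes from a text or from an image, which is what makes the search multimodal.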

Preparing the ONNX model that generates embeddings

podman exec -it oml4py-db bash

% podman exec -it oml4py-db bash

bash-4.4$

Set the environment variables so that the session uses the OML4Py client-side environment.

export PYTHONHOME=$HOME/python

export PATH=$PYTHONHOME/bin:$PATH

export LD_LIBRARY_PATH=$PYTHONHOME/lib:$LD_LIBRARY_PATH

unset PYTHONPATH

bash-4.4$ export PYTHONHOME=$HOME/python

bash-4.4$ export PATH=$PYTHONHOME/bin:$PATH

bash-4.4$ export LD_LIBRARY_PATH=$PYTHONHOME/lib:$LD_LIBRARY_PATH

bash-4.4$ unset PYTHONPATH

bash-4.4$
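If the export later fails with import errors, it can help to confirm that these variables took effect. The following is a small sketch under the assumption that the four settings above are the only ones that matter; the function name `check_oml4py_env` is hypothetical and not part of OML4Py.

```python
import os


def check_oml4py_env(env=None):
    """Return a list of problems found in the environment (empty if none)."""
    env = os.environ if env is None else env
    problems = []
    python_home = env.get("PYTHONHOME")
    if not python_home:
        problems.append("PYTHONHOME is not set")
    else:
        # PATH and LD_LIBRARY_PATH should each contain a PYTHONHOME subdirectory.
        path_dirs = env.get("PATH", "").split(os.pathsep)
        if os.path.join(python_home, "bin") not in path_dirs:
            problems.append("PATH does not contain $PYTHONHOME/bin")
        lib_dirs = env.get("LD_LIBRARY_PATH", "").split(os.pathsep)
        if os.path.join(python_home, "lib") not in lib_dirs:
            problems.append("LD_LIBRARY_PATH does not contain $PYTHONHOME/lib")
    if "PYTHONPATH" in env:
        problems.append("PYTHONPATH is set; the steps above unset it")
    return problems
```

Calling `check_oml4py_env()` with no argument inspects the current process environment; passing a dictionary makes the check easy to try without touching the shell.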

Start Python.

bash-4.4$ python3

Python 3.12.6 (main, Jun 20 2025, 05:48:10) [GCC 8.5.0 20210514 (Red Hat 8.5.0-26.0.1)] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>>

Run the following code to export the model openai/clip-vit-large-patch14 in ONNX format.

import oml

from oml.utils import ONNXPipeline

pipeline = ONNXPipeline(model_name="openai/clip-vit-large-patch14")

pipeline.export2file("openai_clip_vit",output_dir="./work")

>>> import oml

>>> from oml.utils import ONNXPipeline

>>> pipeline = ONNXPipeline(model_name="openai/clip-vit-large-patch14")

>>> pipeline.export2file("openai_clip_vit",output_dir="./work")

tokenizer_config.json: 100%|███████████████████████████████| 905/905 [00:00<00:00, 5.34MB/s]

vocab.json: 100%|████████████████████████████████████████| 961k/961k [00:00<00:00, 2.19MB/s]

merges.txt: 100%|████████████████████████████████████████| 525k/525k [00:00<00:00, 1.18MB/s]

special_tokens_map.json: 100%|█████████████████████████████| 389/389 [00:00<00:00, 1.77MB/s]

tokenizer.json: 100%|██████████████████████████████████| 2.22M/2.22M [00:00<00:00, 5.65MB/s]

config.json: 100%|█████████████████████████████████████| 4.52k/4.52k [00:00<00:00, 14.8MB/s]

model.safetensors: 100%|███████████████████████████████| 1.71G/1.71G [03:02<00:00, 9.35MB/s]

preprocessor_config.json: 100%|████████████████████████████| 316/316 [00:00<00:00, 1.81MB/s]

/home/oracle/python/lib/python3.12/site-packages/transformers/models/clip/modeling_clip.py:282: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!

if seq_length > max_position_embedding:

/home/oracle/python/lib/python3.12/site-packages/transformers/modeling_attn_mask_utils.py:88: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!

if input_shape[-1] > 1 or self.sliding_window is not None:

/home/oracle/python/lib/python3.12/site-packages/transformers/modeling_attn_mask_utils.py:164: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!

if past_key_values_length > 0:

/home/oracle/python/lib/python3.12/site-packages/torch/onnx/symbolic_opset9.py:5383: UserWarning: Exporting aten::index operator of advanced indexing in opset 17 is achieved by combination of multiple ONNX operators, including Reshape, Transpose, Concat, and Gather. If indices include negative values, the exported graph will produce incorrect results.

warnings.warn(

UserWarning:Batch inference not supported in quantized models. Setting batch size to 1.

TracerWarning:Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!

>>>

>>>

bash-4.4$ exit

exit

oml4py-on-db23ai-free % ls -l openai_clip_vit_*

-rw-r--r-- 1 ********** staff 306374876 6 20 17:27 openai_clip_vit_img.onnx

-rw-r--r-- 1 ********** staff 125759349 6 20 17:26 openai_clip_vit_txt.onnx

oml4py-on-db23ai-free %
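As the listing shows, the export produces two files for this model, one with an `_img` suffix and one with a `_txt` suffix. The check can also be scripted; the sketch below assumes exactly that naming pattern (taken from the listing above, not from the OML4Py documentation), and `find_exported_models` is a hypothetical helper name. The demo runs against a temporary directory rather than the real export location.

```python
import tempfile
from pathlib import Path


def find_exported_models(prefix, output_dir="."):
    """Map "img"/"txt" to the exported file's path, or None if it is missing."""
    found = {}
    for kind in ("img", "txt"):
        path = Path(output_dir) / f"{prefix}_{kind}.onnx"
        # Treat an empty file as missing, since an interrupted export may leave one.
        found[kind] = path if path.is_file() and path.stat().st_size > 0 else None
    return found


# Demo in a throwaway directory: only the image model file exists.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "openai_clip_vit_img.onnx").write_bytes(b"\x00")
    demo = find_exported_models("openai_clip_vit", tmp)
    demo_status = {kind: path is not None for kind, path in demo.items()}
```

For the real files, pass the directory where the `ls` above was run as `output_dir`.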

sql sys/[SYS password]@localhost/freepdb1 as sysdba

% sql sys/*******@localhost/freepdb1 as sysdba

SQLcl: 金 6月 20 17:41:03 2025のリリース25.1 Production

Copyright (c) 1982, 2025, Oracle. All rights reserved.

接続先:

Oracle Database 23ai Free Release 23.0.0.0.0 - Develop, Learn, and Run for Free

Version 23.8.0.25.04

SQL>

create or replace directory onnx_import as '/opt/oracle/apex';

grant read,write on directory onnx_import to wksp_apexdev;

grant create mining model to wksp_apexdev;

grant execute on ctxsys.ctx_ddl to wksp_apexdev;

SQL> create or replace directory onnx_import as '/opt/oracle/apex';

Directory ONNX_IMPORTは作成されました。

SQL> grant read,write on directory onnx_import to wksp_apexdev;

Grantが正常に実行されました。

SQL> grant create mining model to wksp_apexdev;

Grantが正常に実行されました。

SQL> grant execute on ctxsys.ctx_ddl to wksp_apexdev;

Grantが正常に実行されました。

SQL> exit

Oracle Database 23ai Free Release 23.0.0.0.0 - Develop, Learn, and Run for Free

Version 23.8.0.25.04から切断されました

%
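If the same setup has to be repeated for another workspace schema, the four statements can be generated instead of retyped. This is only a sketch: `build_setup_statements` is a hypothetical helper, the identifier check is a deliberately strict assumption (unquoted names only), and the generated statements still have to be run by a privileged user as shown above.

```python
import re

# Accept only simple unquoted identifiers, because names cannot be bound as parameters.
_IDENTIFIER = re.compile(r"^[A-Za-z][A-Za-z0-9_$#]*$")


def build_setup_statements(schema, directory="onnx_import", path="/opt/oracle/apex"):
    """Return the directory and grant statements used above for the given schema."""
    for name in (schema, directory):
        if not _IDENTIFIER.match(name):
            raise ValueError(f"not a simple identifier: {name!r}")
    quoted_path = path.replace("'", "''")  # escape single quotes in the literal
    return [
        f"create or replace directory {directory} as '{quoted_path}'",
        f"grant read,write on directory {directory} to {schema}",
        f"grant create mining model to {schema}",
        f"grant execute on ctxsys.ctx_ddl to {schema}",
    ]


statements = build_setup_statements("wksp_apexdev")
```

With the default arguments the result matches the statements entered in the SQLcl session, without the trailing semicolons.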

Load the ONNX models. Start with the image model.

begin

dbms_vector.load_onnx_model(

directory => 'ONNX_IMPORT'

,file_name => 'openai_clip_vit_img.onnx'

,model_name => 'OPENAI_CLIP_VIT_IMG'

);

end;

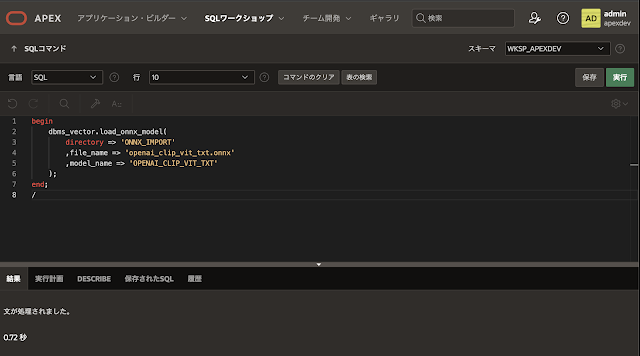

/begin

dbms_vector.load_onnx_model(

directory => 'ONNX_IMPORT'

,file_name => 'openai_clip_vit_txt.onnx'

,model_name => 'OPENAI_CLIP_VIT_TXT'

);

end;

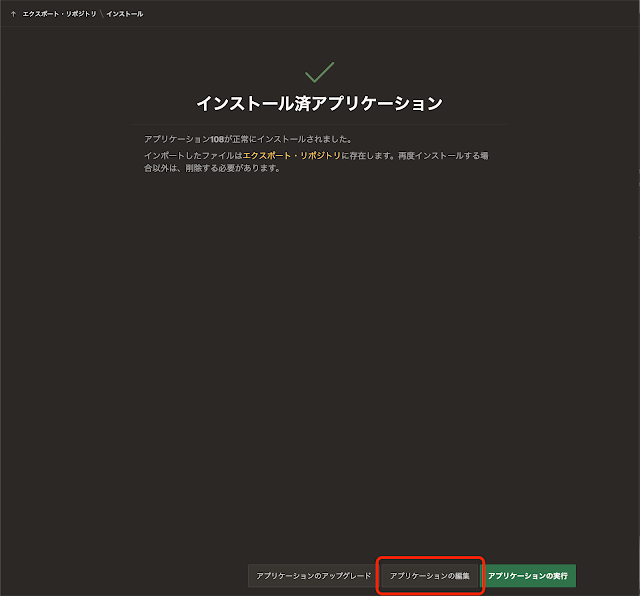

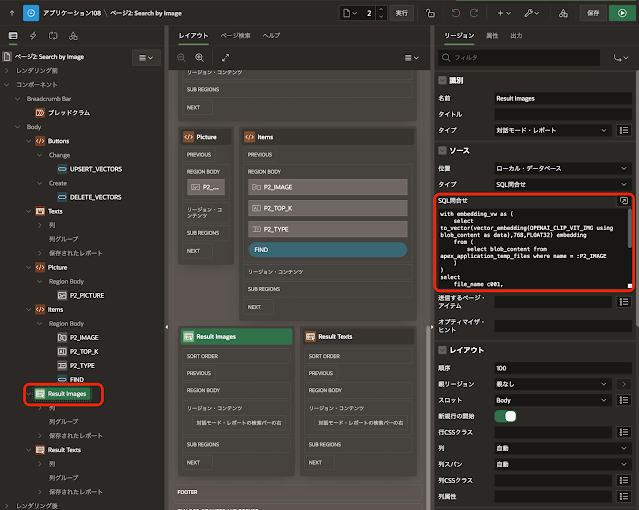

/マルチモーダル検索を行うAPEXアプリケーションの更新

- The JPEG images to be searched are stored in a bucket created in Oracle Cloud Object Storage.

- The OCI user, policy, credentials, and other resources needed to access Object Storage have already been created.

select blob_content, null, filename, mime_type

from apex_application_temp_files

where name = :P2_IMAGE