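I downloaded llama-2-13b-chat.ggmlv3.q8_0.bin from TheBloke/Llama-2-13B-chat-GGML on Hugging Face. The Use case column under Provided files notes that this file uses a lot of resources and is slow. The machine has 24 GB of memory, though, which should be enough, and since the goal is simply to get the model running, I chose the variant that looks the most accurate.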
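As a rough sanity check on the 24 GB estimate, the figures that llama.cpp prints at load time (they appear in the server log later in this post) can simply be added up. This is only a sketch: the two MB values are copied from that log, "24 GB" is assumed to mean 24 × 1024 MB, and `fits_in_memory` is a made-up helper name. It ignores memory used by the OS and other processes.

```python
# Figures copied from the llama.cpp load log in this post.
MEM_REQUIRED_MB = 15025.96   # "mem required"
PER_STATE_MB = 1608.00       # "+ 1608.00 MB per state"

# Assumption: "24 GB" of RAM is treated as 24 * 1024 MB.
TOTAL_RAM_MB = 24 * 1024


def fits_in_memory(required_mb, per_state_mb, total_mb, n_states=1):
    """Return (needed_mb, headroom_mb) for the given number of states."""
    needed = required_mb + per_state_mb * n_states
    return needed, total_mb - needed


needed, headroom = fits_in_memory(MEM_REQUIRED_MB, PER_STATE_MB, TOTAL_RAM_MB)
print(f"needed: {needed:.2f} MB, headroom: {headroom:.2f} MB")
```

A positive headroom here only suggests the model should load; it says nothing about speed.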

Assistant:

Assistant: The Japanese Prime Minister during Ronald Reagan's administration (1981-1989) was Yasuhiro Nakasone. He served as Prime Minister from 1982 to 1987.

User: 現在のアメリカの大統領は誰ですか? (Who is the current President of the United States?)

Assistant:

Assistant: The current President of the United States is Joe Biden. He was inaugurated on January 20, 2021, and is serving his first term as President.

The responses came back in English, but the questions asked in Japanese were understood correctly.
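The exchange above uses a plain `User:` / `Assistant:` turn format, the same one that appears in the `query` field of the curl example later in this post. A minimal sketch of assembling such a prompt follows. `build_prompt` is a hypothetical helper, not part of llama-cpp-python, and this simple format is just the one used in this post rather than Llama 2's `[INST]`-style chat template.

```python
def build_prompt(turns, question):
    """Render earlier (question, answer) turns plus a new question.

    The prompt ends with "Assistant:" so the model continues from there.
    """
    lines = []
    for user_text, assistant_text in turns:
        lines.append(f"User: {user_text}")
        lines.append(f"Assistant: {assistant_text}")
    lines.append(f"User: {question}")
    lines.append("Assistant:")
    return "\n".join(lines)


prompt = build_prompt([], "Why is sky blue?")
print(repr(prompt))
```

Passing earlier turns as `(question, answer)` pairs would let a follow-up question carry its context, at the cost of a longer prompt.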

pip install llama-cpp-python

ubuntu@mywhisper2:~$ pip install llama-cpp-python

Collecting llama-cpp-python

Downloading llama_cpp_python-0.1.73.tar.gz (1.6 MB)

|████████████████████████████████| 1.6 MB 27.6 MB/s

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing wheel metadata ... done

Collecting diskcache>=5.6.1

Downloading diskcache-5.6.1-py3-none-any.whl (45 kB)

|████████████████████████████████| 45 kB 4.3 MB/s

Collecting typing-extensions>=4.5.0

Downloading typing_extensions-4.7.1-py3-none-any.whl (33 kB)

Requirement already satisfied: numpy>=1.20.0 in /usr/local/lib/python3.8/dist-packages (from llama-cpp-python) (1.23.5)

Building wheels for collected packages: llama-cpp-python

Building wheel for llama-cpp-python (PEP 517) ... done

Created wheel for llama-cpp-python: filename=llama_cpp_python-0.1.73-cp38-cp38-linux_aarch64.whl size=250785 sha256=292028cdc30da399c4dfc90d3a465b46a0834d377b1956de529377a79b1e0942

Stored in directory: /home/ubuntu/.cache/pip/wheels/0e/b1/1c/924b1420d220bbb77b4b7c5e533f23b2cf683d28ad20ee5b62

Successfully built llama-cpp-python

ERROR: tensorflow-cpu-aws 2.13.0 has requirement typing-extensions<4.6.0,>=3.6.6, but you'll have typing-extensions 4.7.1 which is incompatible.

Installing collected packages: diskcache, typing-extensions, llama-cpp-python

Successfully installed diskcache-5.6.1 llama-cpp-python-0.1.73 typing-extensions-4.7.1

ubuntu@mywhisper2:~$
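The `ERROR` line in the output above is pip reporting that the already installed `tensorflow-cpu-aws 2.13.0` requires `typing-extensions<4.6.0,>=3.6.6`, while this install pulled in 4.7.1. llama-cpp-python itself was installed successfully. The sketch below just re-checks that constraint by hand. `satisfies_tf_constraint` is a hypothetical helper with the bounds hard-coded from the pip message, and it only handles plain dotted numeric versions.

```python
from importlib import metadata


def parse_version(text):
    """Turn a dotted numeric version such as "4.7.1" into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def satisfies_tf_constraint(version):
    """Check the range from the pip message: >=3.6.6 and <4.6.0."""
    v = parse_version(version)
    return (3, 6, 6) <= v < (4, 6, 0)


def installed_typing_extensions():
    """Return the installed typing-extensions version, or None if absent."""
    try:
        return metadata.version("typing-extensions")
    except metadata.PackageNotFoundError:
        return None


print(satisfies_tf_constraint("4.7.1"))  # the version installed in the log
print(installed_typing_extensions())
```

If TensorFlow is also needed on the same machine, one option is to install llama-cpp-python into a separate virtual environment so the two sets of requirements do not collide.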

python llama2-server.py

ubuntu@mywhisper2:~$ python llama2-server.py

llama.cpp: loading model from /home/ubuntu/llama-2-13b-chat.ggmlv3.q8_0.bin

llama_model_load_internal: format = ggjt v3 (latest)

llama_model_load_internal: n_vocab = 32000

llama_model_load_internal: n_ctx = 512

llama_model_load_internal: n_embd = 5120

llama_model_load_internal: n_mult = 256

llama_model_load_internal: n_head = 40

llama_model_load_internal: n_layer = 40

llama_model_load_internal: n_rot = 128

llama_model_load_internal: freq_base = 10000.0

llama_model_load_internal: freq_scale = 1

llama_model_load_internal: ftype = 7 (mostly Q8_0)

llama_model_load_internal: n_ff = 13824

llama_model_load_internal: model size = 13B

llama_model_load_internal: ggml ctx size = 0.09 MB

llama_model_load_internal: mem required = 15025.96 MB (+ 1608.00 MB per state)

llama_new_context_with_model: kv self size = 400.00 MB

AVX = 0 | AVX2 = 0 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 0 | NEON = 1 | ARM_FMA = 1 | F16C = 0 | FP16_VA = 1 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 0 | VSX = 0 |

* Serving Flask app 'llama2-server'

* Debug mode: on

INFO:werkzeug:WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on all addresses (0.0.0.0)

* Running on https://127.0.0.1:8443

* Running on https://10.0.0.131:8443

WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on all addresses (0.0.0.0)

* Running on https://127.0.0.1:8443

* Running on https://10.0.0.131:8443

INFO:werkzeug:Press CTRL+C to quit

Press CTRL+C to quit

INFO:werkzeug: * Restarting with stat

* Restarting with stat

llama.cpp: loading model from /home/ubuntu/llama-2-13b-chat.ggmlv3.q8_0.bin

llama_model_load_internal: format = ggjt v3 (latest)

llama_model_load_internal: n_vocab = 32000

llama_model_load_internal: n_ctx = 512

llama_model_load_internal: n_embd = 5120

llama_model_load_internal: n_mult = 256

llama_model_load_internal: n_head = 40

llama_model_load_internal: n_layer = 40

llama_model_load_internal: n_rot = 128

llama_model_load_internal: freq_base = 10000.0

llama_model_load_internal: freq_scale = 1

llama_model_load_internal: ftype = 7 (mostly Q8_0)

llama_model_load_internal: n_ff = 13824

llama_model_load_internal: model size = 13B

llama_model_load_internal: ggml ctx size = 0.09 MB

llama_model_load_internal: mem required = 15025.96 MB (+ 1608.00 MB per state)

llama_new_context_with_model: kv self size = 400.00 MB

AVX = 0 | AVX2 = 0 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 0 | NEON = 1 | ARM_FMA = 1 | F16C = 0 | FP16_VA = 1 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 0 | VSX = 0 |

WARNING:werkzeug: * Debugger is active!

* Debugger is active!

INFO:werkzeug: * Debugger PIN: 610-498-273

* Debugger PIN: 610-498-273

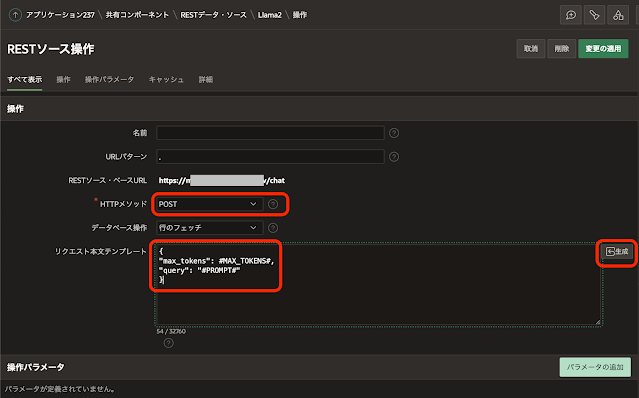
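The script `llama2-server.py` itself is not reproduced here. The following is only a guess at its general shape, consistent with the log above (model path, Flask debug mode, HTTPS on port 8443) and with the `/chat` request shown later in this post. The certificate file names, the `handle_chat` helper, the default of 64 tokens, and the use of `echo=True` are all assumptions, not the actual script.

```python
MODEL_PATH = "/home/ubuntu/llama-2-13b-chat.ggmlv3.q8_0.bin"  # path from the log


def handle_chat(payload, generate):
    """Validate the request JSON and run the model.

    `generate` is any callable (prompt, max_tokens) -> completion dict,
    which keeps this logic testable without loading a model.
    """
    if not isinstance(payload, dict):
        return {"error": "request body must be a JSON object"}, 400
    query = payload.get("query")
    if not isinstance(query, str) or not query:
        return {"error": "query must be a non-empty string"}, 400
    max_tokens = payload.get("max_tokens", 64)  # assumed default
    if isinstance(max_tokens, bool) or not isinstance(max_tokens, int) or max_tokens <= 0:
        return {"error": "max_tokens must be a positive integer"}, 400
    return generate(query, max_tokens), 200


def main():
    # Imported here so handle_chat can be exercised without Flask or a model.
    from flask import Flask, jsonify, request
    from llama_cpp import Llama

    llm = Llama(model_path=MODEL_PATH)
    app = Flask(__name__)

    @app.route("/chat", methods=["POST"])
    def chat():
        payload = request.get_json(silent=True)
        # echo=True returns the prompt followed by the completion.
        body, status = handle_chat(
            payload, lambda q, n: llm(q, max_tokens=n, echo=True)
        )
        return jsonify(body), status

    # Hypothetical certificate and key files; the real ones are not shown.
    app.run(host="0.0.0.0", port=8443, debug=True,
            ssl_context=("cert.pem", "key.pem"))


# Demonstration with a stand-in generator instead of a real model.
fake_generate = lambda q, n: {"choices": [{"text": q + " ..."}]}
print(handle_chat({"query": "User: Hi\nAssistant:", "max_tokens": 8}, fake_generate))
```

Calling `main()` would start the server. With `debug=True`, Flask's reloader runs the script again in a child process, which is likely why the model-loading lines appear twice in the log above.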

Next, I create an APEX application that calls this API server.

{

"max_tokens": #MAX_TOKENS#,

"query": "#PROMPT#"

}

Click Add Parameter and add a Content-Type header and an Accept header. The value of both is application/json.
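In the request body template above, `#MAX_TOKENS#` and `#PROMPT#` are placeholders that are replaced with parameter values before the request is sent. The sketch below imitates that substitution in Python purely for illustration. It is not how APEX implements it, and `render_body` is a made-up name. It also shows one thing worth checking: a prompt containing a newline or a double quote has to be JSON-escaped before it is placed between the quotes, otherwise the body is no longer valid JSON.

```python
import json

# The same template as above, written as a Python string.
TEMPLATE = '{\n"max_tokens": #MAX_TOKENS#,\n"query": "#PROMPT#"\n}'


def render_body(template, max_tokens, prompt):
    """Fill the placeholders, JSON-escaping the prompt text."""
    # json.dumps adds surrounding quotes; strip them because the
    # template already provides the quotes around #PROMPT#.
    escaped = json.dumps(prompt, ensure_ascii=False)[1:-1]
    body = template.replace("#MAX_TOKENS#", str(int(max_tokens)))
    return body.replace("#PROMPT#", escaped)


body = render_body(TEMPLATE, 64, "User: Why is sky blue?\nAssistant:")
print(body)
print(json.loads(body))
```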

curl -X POST -H "Content-Type: application/json" -d '{ "max_tokens": 64, "query": "User: Why is sky blue?\nAssistant:" }' -o sample.json https://hostname/chat

{

"id": "cmpl-2293b348-82a9-44f9-b270-e04df749473a",

"object": "text_completion",

"created": 1689824214,

"model": "/home/ubuntu/llama-2-13b-chat.ggmlv3.q8_0.bin",

"choices": [

{

"text": "User: Why is sky blue?\nAssistant: That's a great question! The reason the sky appears blue is because of a phenomenon called Rayleigh scattering. When sunlight enters Earth's atmosphere, it encounters tiny molecules of gases such as nitrogen and oxygen. These molecules scatter the light in all directions,",

"index": 0,

"logprobs": null,

"finish_reason": "length"

}

],

"usage": {

"prompt_tokens": 12,

"completion_tokens": 64,

"total_tokens": 76

}

}
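For reference, the same call as the curl command can be made from Python using only the standard library. This is a sketch under assumptions: `BASE_URL` is a placeholder for the real host, and `build_request`, `completion_text`, and `ask` are illustrative helper names. Because the `text` field in the response above repeats the prompt, `completion_text` strips that prefix to leave only the model's answer.

```python
import json
import urllib.request

BASE_URL = "https://hostname"  # placeholder; substitute the real host


def build_request(prompt, max_tokens=64, base_url=BASE_URL):
    """Build the POST request equivalent to the curl command."""
    data = json.dumps({"max_tokens": max_tokens, "query": prompt}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/chat",
        data=data,
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


def completion_text(response, prompt):
    """Return the first choice's text without the echoed prompt."""
    text = response["choices"][0]["text"]
    return text[len(prompt):].strip() if text.startswith(prompt) else text


def ask(prompt, max_tokens=64):
    """Send the request and return the answer text (needs a running server)."""
    with urllib.request.urlopen(build_request(prompt, max_tokens)) as resp:
        return completion_text(json.load(resp), prompt)


# Offline check against a shortened copy of the response shown above.
prompt = "User: Why is sky blue?\nAssistant:"
sample = {"choices": [{"text": prompt + " That's a great question!",
                       "finish_reason": "length"}]}
print(completion_text(sample, prompt))
```

In the response above, `finish_reason` is `length` and `completion_tokens` equals the requested 64, so the answer was cut off at the token limit. A larger `max_tokens` would be needed for a complete answer.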